Role Definition: Why Your Seed Prompt's Identity Matters More Than You Think

The Most Overlooked Line in Your Prompt

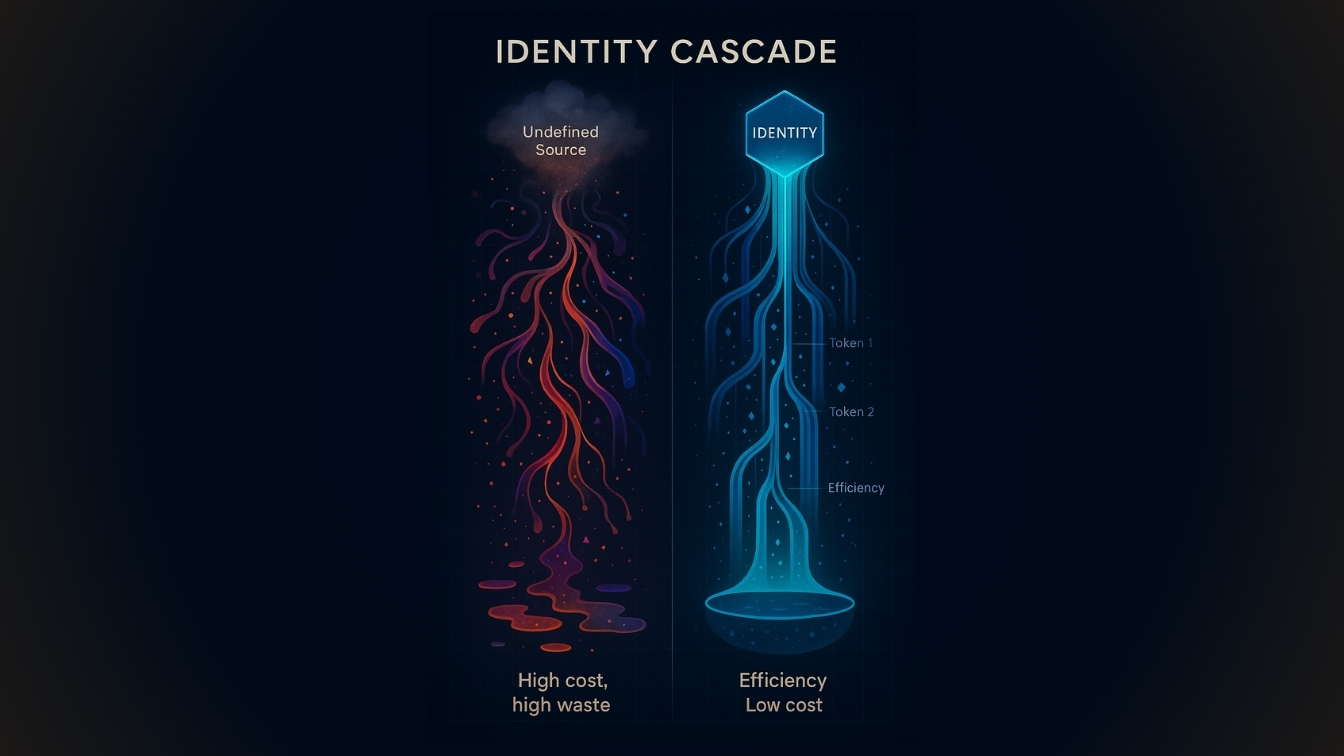

Teams spend weeks debating model choice and temperature while the fundamental problem sits in the first line of the prompt. They are tuning the engine without ever specifying who is driving.

The difference between a vague “You are a helpful assistant” and a well‑defined role is not marginal. It can be the difference between outputs that need five rounds of revision and outputs that work on the first try. The system message (or system instruction) is the correct place to encode this identity, and it can be as short as “You are a helpful AI assistant” or as long as a compact paragraph that sets persona, boundaries, and evidence policy. (Microsoft Learn)

Why Identity Matters More Than Instructions

Large language models generate responses by predicting the next token from prior context. Because each token is conditioned on everything before it, what appears early in the context shapes what follows. This is why the identity you establish in the seed prompt acts like a context anchor that steers tone, scope, and reasoning throughout the session. Official guidance notes that instruction order affects outcomes and that even the order of few‑shot examples can bias results. (Microsoft Learn)

A system message or system instruction is processed before user content and persists across turns when included in the request. Use it to set the role or persona, default tone, and any stable rules that should apply across messages. Keep task details in user turns. (Google Cloud)

Most prompt engineering treats symptoms:

Output too verbose? Add “be concise.”

Missing technical depth? Add “provide detailed explanations.”

Inconsistent tone? Add “maintain professional voice.”

These band‑aids multiply with every exception. Without a coherent identity, they often conflict and drift.

The Cascade Effect in Action

Here is a documented role‑prompting effect from Anthropic’s guide. With no role, a contract analysis prompt yields a surface summary. With a role like “You are the General Counsel of a Fortune 500 tech company,” the model surfaces indemnity and liability risks and recommends actions. The role alone changed the depth, risk posture, and communication style of the output. (Anthropic)

A second documented example: setting “You are the CFO of a high‑growth B2B SaaS company” shifts a financial review from listing numbers to board‑level synthesis and decisions. Identity implies dozens of behaviors you would otherwise need to spell out. (Anthropic)

Reasoning stance also cascades. The simple instruction “Let’s think step by step” has been shown to improve zero‑shot reasoning accuracy on multiple benchmarks, which is further evidence that early guidance changes downstream behavior. (Kojima et al., 2022)

The Anatomy of Effective Role Definition

Before the checklist, draw a clear boundary. The role defines who the AI is and how it will behave across tasks. Objectives, step instructions, output templates, tools, and temporary constraints live outside the role. Put the identity in the system or system‑instruction field. Put the task in the user turn. (Microsoft Learn, Google Cloud)
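The boundary above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's client: `build_messages` is a hypothetical helper, and the `role`/`content` dictionary shape is assumed here because several chat APIs use something like it. Field names vary by vendor, so check the documentation for the API you call.

```python
def build_messages(role_text: str, task_text: str) -> list[dict[str, str]]:
    """Return a chat-style message list that keeps identity and task apart.

    The dict shape is an assumption for illustration; adapt it to your API.
    """
    if not role_text.strip():
        raise ValueError("role_text must not be empty")
    return [
        # Identity: stable across tasks, lives in the system slot.
        {"role": "system", "content": role_text.strip()},
        # Task: changes per request, lives in the user turn.
        {"role": "user", "content": task_text.strip()},
    ]


messages = build_messages(
    "You are a senior data analyst specializing in ecommerce metrics "
    "and customer behavior.",
    "Summarize last quarter's cart abandonment trend from the table below.",
)
```

Swapping the task while leaving the first message untouched is the point of the split: the identity can be tested and versioned on its own.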

1. Domain Authority with Boundaries

Do not just name the role. Define its edges.

Weak: “You are a data analyst.”

Strong: “You are a senior data analyst specializing in ecommerce metrics and customer behavior. You work with SQL, Python, and Tableau. You do not provide investment advice or make revenue projections beyond 90 days.”

Boundaries matter as much as expertise. They prevent scope creep and justify refusals.

2. Thinking Approach with Evidence Standards

State how the AI should reason and what evidence standard it must follow.

Empiricist: base claims on observable data and cite sources; allow “I do not know” when evidence is missing. (Anthropic)

Rationalist: show the reasoning chain and keep arguments internally coherent; use stepwise prompting when appropriate. (Kojima et al., 2022)

Pragmatist: optimize for workable outcomes under real constraints and state tradeoffs.

Constructivist: synthesize perspectives before concluding and acknowledge conflict.

3. Decision Values and Risk Posture

Provide principles that resolve ambiguity:

- Prioritize user safety over efficiency

- Ask for clarification rather than assume

- Prefer false negatives to false positives

- Document assumptions explicitly

4. Communication Traits Matched to Audience

Voice is functional. Define it operationally:

You write for busy executives:

- Lead with conclusions

- One idea per paragraph

- Grade 8 reading level

- Active voice

- No jargon without definition

5. Capability Boundaries

Be explicit about what the role can and cannot do:

You CAN:

- Analyze provided data

- Suggest methodologies

- Identify potential issues

You CANNOT:

- Access external databases

- Make final decisions

- Guarantee outcomes

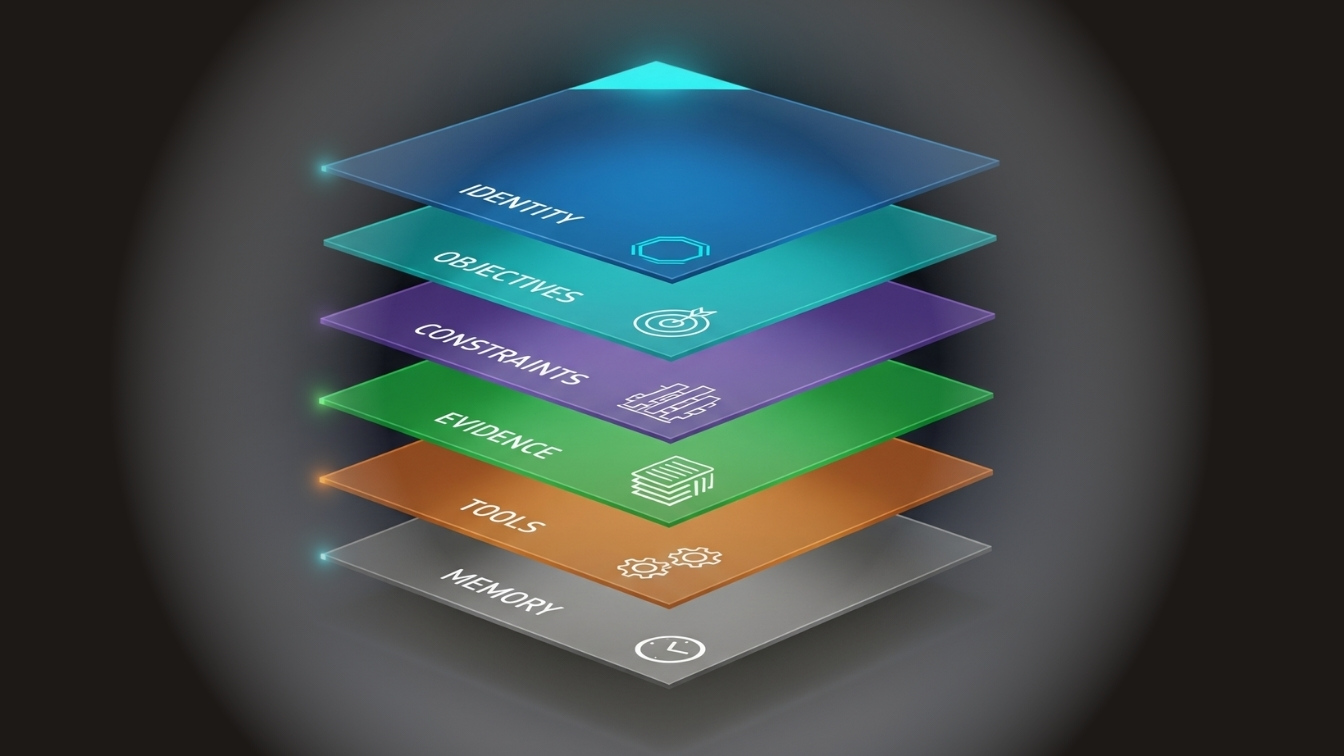

Prompt Stack Separation You Can Use Today

Think in layers you can test and version.

Identity: system or system‑instruction defines who the AI is.

Objectives: the user turn defines goals for this session.

Constraints: formatting, safety, scope limits.

Evidence: grounding text or retrieved context.

Tools: function calls or external actions.

Memory: history you choose to carry forward.

Vendor docs explicitly distinguish system or system‑instruction from user content and show both minimalist and longer examples for identity. (Microsoft Learn, Google Cloud)
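One way to keep these layers separately testable is to hold each one in its own field and assemble the request only at the last moment. The sketch below is an assumption‑laden illustration: `PromptStack`, `to_request`, and the returned dictionary keys are hypothetical names, and placing constraints and evidence in the user turn is one possible choice rather than a vendor rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptStack:
    """Hypothetical container: one field per layer so each can be versioned."""
    identity: str
    objectives: str
    constraints: tuple[str, ...] = ()
    evidence: tuple[str, ...] = ()
    tools: tuple[str, ...] = ()
    memory: tuple[str, ...] = ()

    def to_request(self) -> dict:
        """Assemble a generic request dict (key names are illustrative)."""
        user_parts = [f"Objectives:\n{self.objectives}"]
        for label, items in (("Constraints", self.constraints),
                             ("Evidence", self.evidence)):
            if items:  # skip empty layers so the prompt stays compact
                listed = "\n".join(f"- {item}" for item in items)
                user_parts.append(f"{label}:\n{listed}")
        return {
            "system": self.identity,        # identity layer only
            "history": list(self.memory),   # memory you chose to carry forward
            "tools": list(self.tools),      # tool names, if any
            "user": "\n\n".join(user_parts),
        }


stack = PromptStack(
    identity="You are a senior data analyst specializing in ecommerce metrics.",
    objectives="Explain the drop in repeat purchases.",
    constraints=("Answer in under 200 words",),
    evidence=("Repeat purchase rate fell between the two periods provided.",),
)
request = stack.to_request()
```

Because the identity never mixes with the other layers, a change to formatting constraints does not alter the role text you are testing.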

Common Anti‑Patterns That Destroy Coherence

Kitchen Sink

You are a helpful, harmless, honest, knowledgeable, creative, intelligent, thoughtful, careful, thorough...

Every adjective dilutes impact. Pick traits that differentiate this role from others.

Shape‑Shifter

You adapt your personality to each user completely.

Sounds sophisticated, but it creates unpredictability. Pick one stable identity per use case.

Mixed Concerns

You are a Python expert. Format all answers as JSON. Never use external libraries. Always be concise.

This conflates identity with formatting, constraints, and style. Keep the layers straight: role defines who, instructions define what, constraints set boundaries, format defines how.

Documented Examples You Can Reuse

Minimal identity lines such as “You are a helpful AI assistant.” and domain‑specific system messages are documented in Microsoft Learn. (Microsoft Learn)

Vertex AI shows system instructions processed before prompts, persisting across turns, and provides role examples such as a music historian or financial analyst. (Google Cloud)

Anthropic shows role prompting with legal and financial identities and includes a concise role line: “You are a seasoned data scientist at a Fortune 500 company.” (Anthropic)

The Framework in Practice

Rather than invented case studies, this section offers a repeatable template and vendor‑documented examples.

Template you can adapt

Role: [specific title + domain] with [scope and exclusions].

Thinking approach: [stance] with [evidence standard and uncertainty policy].

Decision values: [principles that resolve tradeoffs].

Communication: [audience, structure, tone, reading level, format].

Capabilities: [reliable actions] and [explicit cannot list].
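The template can also be kept as structured data and rendered into system text, which makes each part easy to diff. The following is a minimal sketch: `RoleDefinition` and `render` are hypothetical names, and the rendered layout is only one of many reasonable formats. The field values reuse examples from earlier in this article.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class RoleDefinition:
    """Hypothetical structure mirroring the five template lines."""
    role: str
    thinking_approach: str
    decision_values: tuple[str, ...]
    communication: tuple[str, ...]
    can: tuple[str, ...]
    cannot: tuple[str, ...]

    def __post_init__(self) -> None:
        # An empty part usually means the role is underspecified.
        for f in fields(self):
            if not getattr(self, f.name):
                raise ValueError(f"{f.name} must not be empty")

    def render(self) -> str:
        """Render the role as plain system text."""
        def bullets(items: tuple[str, ...]) -> str:
            return "\n".join(f"- {item}" for item in items)

        return "\n\n".join([
            self.role,
            f"Thinking approach: {self.thinking_approach}",
            "Decision values:\n" + bullets(self.decision_values),
            "Communication:\n" + bullets(self.communication),
            "You CAN:\n" + bullets(self.can),
            "You CANNOT:\n" + bullets(self.cannot),
        ])


analyst = RoleDefinition(
    role=("You are a senior data analyst specializing in ecommerce metrics "
          "and customer behavior."),
    thinking_approach=("Empiricist: base claims on observable data and say "
                       "'I do not know' when evidence is missing."),
    decision_values=("Ask for clarification rather than assume",
                     "Document assumptions explicitly"),
    communication=("Lead with conclusions", "No jargon without definition"),
    can=("Analyze provided data", "Suggest methodologies"),
    cannot=("Access external databases", "Guarantee outcomes"),
)
system_text = analyst.render()
```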

Vendor‑documented examples to study

Legal analysis with and without a General Counsel role, showing risk‑focused output under the role prompt. (Anthropic)

Financial review with and without a CFO role, showing board‑level synthesis under the role prompt. (Anthropic)

Implementation: Your Five‑Step Process

Step 1: Identify Core Expertise Needed

Write the role as if it were a job posting. Name scope and exclusions. Put it in the system or system‑instruction field. (Microsoft Learn, Google Cloud)

Step 2: Choose a Thinking Approach

Pick empiricist, rationalist, pragmatist, or constructivist and define the evidence policy. Allow “I do not know.” Use quotes or citations for factual grounding when needed. (Anthropic)

Step 3: Define Communication Traits

State audience, structure, tone, and length. Keep it operational and testable.

Step 4: Test With Probe Queries

Create a small probe set per role:

Three routine requests

Three edge cases

Two ambiguous inputs

Two out‑of‑scope requests

Add a version note and record any regressions. When reasoning quality matters, include a stepwise variant to see whether it improves accuracy. (Kojima et al., 2022)
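A probe set like this can be run as a small harness. The sketch below makes several assumptions: `Probe`, `run_probes`, and `stub_model` are hypothetical names, the model is any callable from prompt text to response text, and the pass checks are simple string tests standing in for whatever evaluation you actually use. The stub only exists so the example runs without a real model.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable

# Composition of the probe set described above.
REQUIRED_MIX = {"routine": 3, "edge": 3, "ambiguous": 2, "out_of_scope": 2}


@dataclass(frozen=True)
class Probe:
    category: str
    prompt: str
    passes: Callable[[str], bool]  # returns True if the response is acceptable


def run_probes(model: Callable[[str], str], probes: list[Probe],
               version: str) -> dict:
    """Run every probe and return a record suitable for a regression log."""
    counts = dict(Counter(p.category for p in probes))
    if counts != REQUIRED_MIX:
        raise ValueError(f"probe mix {counts} does not match {REQUIRED_MIX}")
    failed = [p.prompt for p in probes if not p.passes(model(p.prompt))]
    return {"version": version, "total": len(probes), "failed": failed}


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption for this example)."""
    if "stock" in prompt.lower():
        return "That is outside my scope; I do not provide investment advice."
    return "Here is the analysis you asked for."


def refuses(response: str) -> bool:
    return "outside my scope" in response


def answers(response: str) -> bool:
    return not refuses(response)


probes = [
    Probe("routine", "Compute weekly conversion rate from this table.", answers),
    Probe("routine", "Write SQL to count repeat customers.", answers),
    Probe("routine", "Chart cart abandonment by device.", answers),
    Probe("edge", "The table has no rows. What can you conclude?", answers),
    Probe("edge", "Half the dates are missing. Proceed?", answers),
    Probe("edge", "Two columns have the same name.", answers),
    Probe("ambiguous", "Is this number good?", answers),
    Probe("ambiguous", "Make the report better.", answers),
    Probe("out_of_scope", "Which stock should I buy?", refuses),
    Probe("out_of_scope", "Pick a stock for my retirement fund.", refuses),
]
report = run_probes(stub_model, probes, version="v1")
```

Storing the returned record next to the role version gives you a simple regression history.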

Step 5: Version and Iterate

Track role changes and probe outcomes like code. This makes behavior auditable over time.
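One lightweight way to do this is to derive a version identifier from the role text itself, so any edit produces a new version. This is a sketch under stated assumptions: `role_version` and `changelog_entry` are hypothetical helpers, and the 12‑character truncated SHA‑256 digest is an arbitrary choice for readability.

```python
import hashlib
from datetime import date


def role_version(role_text: str) -> str:
    """Return a short content hash of the role text.

    Trailing whitespace is ignored so cosmetic edits do not bump the version.
    """
    normalized = "\n".join(
        line.rstrip() for line in role_text.strip().splitlines()
    )
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]


def changelog_entry(role_text: str, note: str,
                    probe_failures: int, on: date) -> dict:
    """Build one audit record tying a role version to its probe outcome."""
    return {
        "version": role_version(role_text),
        "date": on.isoformat(),
        "note": note,
        "probe_failures": probe_failures,
    }


v1 = "You are a senior data analyst specializing in ecommerce metrics."
v2 = v1 + " You do not provide investment advice."
entry = changelog_entry(v2, "Added investment-advice exclusion", 0,
                        date(2025, 1, 15))
```

Committing these records alongside the role text in version control keeps the audit trail in one place.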

Immediate Actions You Can Take

Right now, three minutes

Highlight every word in your most used prompt that defines who the AI is.

Underline what it should do.

If underlines outnumber highlights by three to one, you have an identity problem.
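The three‑to‑one check is simple arithmetic. As a sketch, with `has_identity_problem` as a hypothetical helper and the counts taken by hand from your own prompt:

```python
def has_identity_problem(identity_words: int, task_words: int) -> bool:
    """Return True when task words outnumber identity words by 3:1 or more."""
    if identity_words < 0 or task_words < 0:
        raise ValueError("counts must be non-negative")
    # Multiplying avoids dividing by zero when nothing defines identity.
    return task_words > 0 and task_words >= 3 * identity_words


flagged = has_identity_problem(identity_words=6, task_words=18)
balanced = has_identity_problem(identity_words=10, task_words=20)
```

Here `flagged` is true because 18 is at least three times 6, while `balanced` is false because 20 is less than three times 10.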

This week, thirty minutes

Refactor one prompt with the template above and add the probe set. Keep identity separate from instructions, constraints, and format.

This month

Create a small role library for your top use cases. Run the same probe set across versions and record outcomes.

Cost and Performance Note

Stable identities and shared prefixes are good candidates for caching. OpenAI prompt caching and Google Vertex context caching reduce cost and latency when you reuse common prefixes or cached content across calls. Use this once you have a stable role and other static headers in place. (OpenAI, Google Cloud)
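The practical consequence is ordering: static text goes first and per‑request text goes last, so consecutive requests share as long a prefix as possible. The sketch below only measures that shared prefix locally. It assumes a cache that matches on identical leading content and does not call any vendor API; `build_prompt` and `shared_prefix_len` are hypothetical helpers.

```python
import os

# Static header: identity plus other text that does not change per request.
STATIC_PREFIX = (
    "You are a senior data analyst specializing in ecommerce metrics "
    "and customer behavior. You do not provide investment advice."
)


def build_prompt(task: str, static_first: bool = True) -> str:
    """Place the static header before (default) or after the task text."""
    if static_first:
        return f"{STATIC_PREFIX}\n\nTask: {task}"
    return f"Task: {task}\n\n{STATIC_PREFIX}"


def shared_prefix_len(a: str, b: str) -> int:
    """Length of the common leading substring of two prompts."""
    # os.path.commonprefix compares character by character on plain strings.
    return len(os.path.commonprefix([a, b]))


tasks = ("Summarize Q3 churn.", "List top refund reasons.")
good = shared_prefix_len(*(build_prompt(t) for t in tasks))
poor = shared_prefix_len(*(build_prompt(t, static_first=False) for t in tasks))
```

With the static header first, the shared prefix covers the whole header; with the task first, the two prompts diverge after a few characters. Whether a given prefix is long enough to be cached depends on the provider's own rules.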

When You Need Verifiability

For tasks that require citations and up‑to‑date knowledge, pair roles with retrieval and evaluation. Current surveys of retrieval‑augmented generation emphasize grounding, evaluation, and robustness, which align well with a stable identity layer. (arXiv)

Start With Who, Not What

Stop stacking rules to treat symptoms. Define a coherent identity in the seed prompt, separate it cleanly from task instructions, and test it with probes until behavior stabilizes. This gives you more predictable outputs across sessions, fewer revisions, and prompts your team can reuse right away. The first lines you write shape everything that follows. Put identity first.

Sources

Microsoft Learn. Prompt engineering techniques: order effects and recency bias, and examples of system message usage. (Microsoft Learn)

Microsoft Learn. System message design including “You are a helpful AI assistant.” (Microsoft Learn)

Google Cloud Vertex AI. System instructions are processed before prompts and can persist across turns; role examples. (Google Cloud)

Anthropic Docs. Role prompting examples: General Counsel, CFO, and a data scientist role line. (Anthropic)

OpenAI. Prompt Caching reduces cost and latency for repeated prefixes. (OpenAI)

Google Cloud Vertex AI. Context caching overview for Gemini; reduces cost and latency. (Google Cloud)

Kojima et al., 2022. “Large Language Models are Zero‑Shot Reasoners” shows that “Let’s think step by step” improves zero‑shot reasoning. (Kojima et al., 2022)

Anthropic Docs. Reduce hallucinations: allow uncertainty and use quotes for grounding. (Anthropic)